This program parses recipes from common websites and displays them using plain-old HTML.

You can use it here: https://www.plainoldrecipe.com/

Screenshots

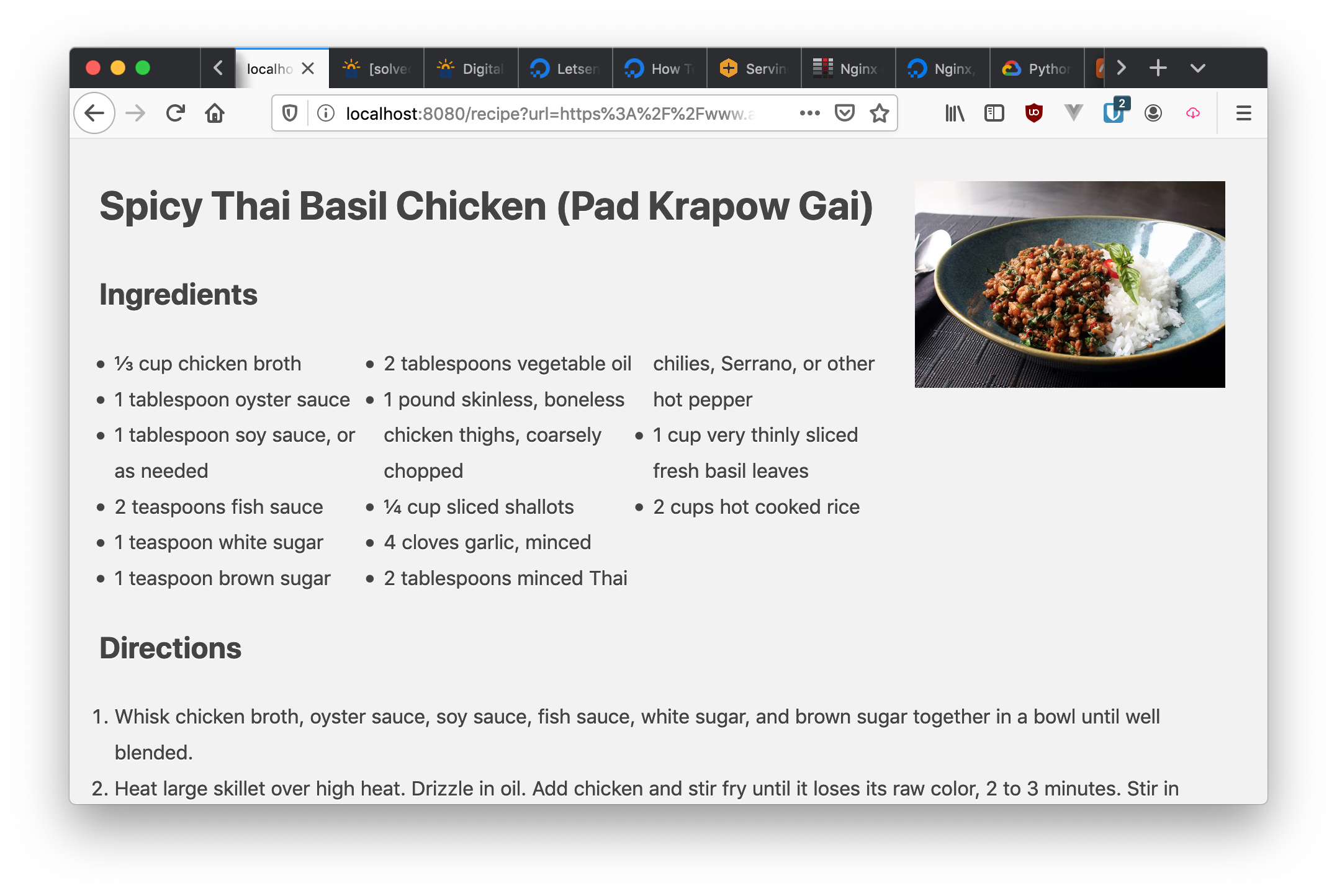

View the recipe in your browser:

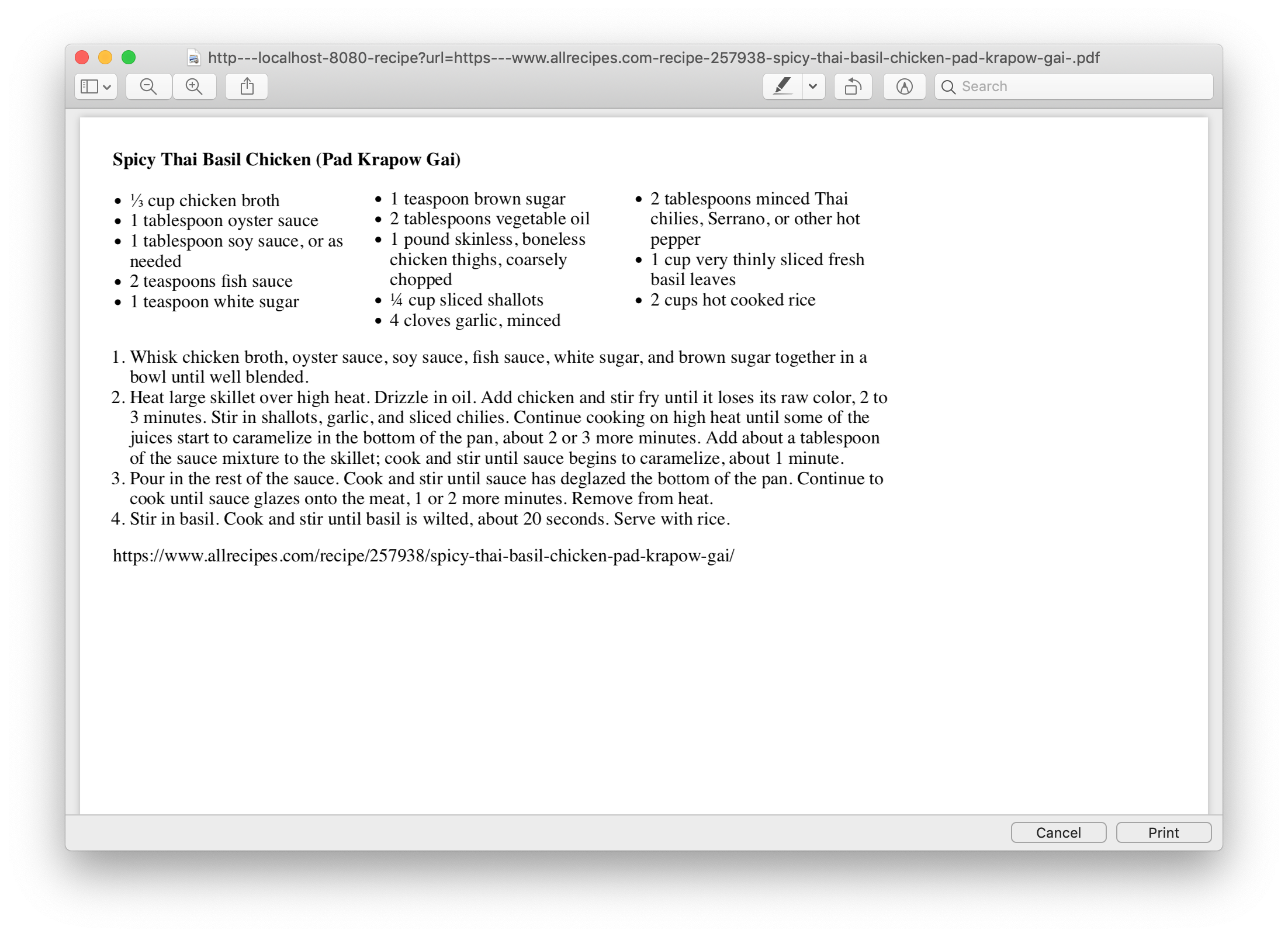

If you print the recipe, it shows with minimal formatting:

Deploy

Run `deploy.sh`.

Acknowledgements

Contributing

- If you want to add a new scraper, please feel free to make a PR. Your diff should touch exactly two files: update `parsers/__init__.py` and add a new class in the `parsers/` directory. Here is an example of what your commit might look like.

- If you want to fix a bug in an existing scraper, please feel free to do so, and include an example URL that your change fixes. Your PR should modify exactly one file: the corresponding module in the `parsers/` directory.

- If you want to make any other modification or refactor, please create an issue and ask before making your PR. Of course, you are welcome to fork, modify, and distribute this code with your changes in accordance with the LICENSE.

- I don't guarantee that I will keep this repo up to date, or that I will respond in any sort of timely fashion! Your best bet for any change is to keep PRs small and focused on the minimum changeset needed to add your scraper :)
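To make the two-file shape of a new-scraper PR concrete, here is a minimal sketch of what such a change might contain. Every name in it (`Recipe`, `ExampleSite`, `PARSERS`, `get_parser`, and the `www.example.com` domain) is hypothetical and chosen for illustration; the real base class and registration mechanism in `parsers/` may look different, so check an existing parser before copying this.

```python
import re
from urllib.parse import urlparse


class Recipe:
    """Hypothetical base class that every scraper would extend."""

    def __init__(self, url):
        self.url = url

    def parse(self, html):
        raise NotImplementedError


class ExampleSite(Recipe):
    """Hypothetical new scraper; it would live in its own module under parsers/."""

    def parse(self, html):
        # A real scraper would use a proper HTML parser; a regex on <title>
        # keeps this sketch dependency-free.
        match = re.search(r"<title>(.*?)</title>", html, re.DOTALL | re.IGNORECASE)
        name = match.group(1).strip() if match else ""
        return {"name": name, "url": self.url}


# Hypothetical registry, standing in for the edit to parsers/__init__.py:
# it maps a site's domain to the class that knows how to parse it.
PARSERS = {
    "www.example.com": ExampleSite,
}


def get_parser(url):
    """Return a parser instance for the URL's domain, or raise ValueError."""
    domain = urlparse(url).netloc
    parser_cls = PARSERS.get(domain)
    if parser_cls is None:
        raise ValueError(f"No parser registered for {domain}")
    return parser_cls(url)
```

In this sketch, the new class is one file and the added registry entry is the other, which is why such a PR can stay at exactly two files.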

Testing PRs Locally

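Fetch the pull request into a local branch, replacing ID with the PR number and BRANCHNAME with a local branch name of your choice:

git fetch origin pull/ID/head:BRANCHNAME

Then switch to that branch with `git checkout BRANCHNAME`.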